Fortunately, higher-quality, more secure alternatives are available here at home

By Peter Kant, CEO, Enabled Intelligence

Recent media reports in the Washington Post and elsewhere have detailed how Scale AI, a provider of AI to the US Department of Defense and other US government agencies, relies on low-paid foreign gig workers to perform the critical work of labeling and annotating the data used in AI models.

According to these reports, Scale AI, which received a $78M US Army contract for AI-related work, often does not perform the critical task of data annotation itself. Instead, when it wins a contract to create AI training data, it outsources the work to another company, Remotasks, which Scale AI owns.

According to Remotasks’ website, it has approximately 240,000 gig workers, or “taskers,” spread across 90 countries, including Burundi and the Philippines. According to the Washington Post, these foreign workers annotate data at home or in crowded offices and internet cafes, and many of them say they are poorly paid, with little regard for basic labor standards.

This is bad news, and not just for Scale AI. The reporting, which, to be fair, Scale AI disputes, raises two questions: “Do all AI companies use Scale’s approach? Is relying on low-paid foreign workers the price we must pay for world-class AI technology?”

The use of foreign labor by companies such as Scale AI raises another question: how are they safeguarding the data they annotate under their US government contracts? It’s hard to tell from websites and marketing materials. Clearly, the US government does not want overseas workers handling sensitive information as part of an AI government contract. Nor, I suspect, do many commercial companies with sensitive customer data and valuable intellectual property to safeguard. To date, however, there has been limited government oversight focused on this issue. Policymakers are starting to look into it and ask the right questions, but policy leadership must move faster.

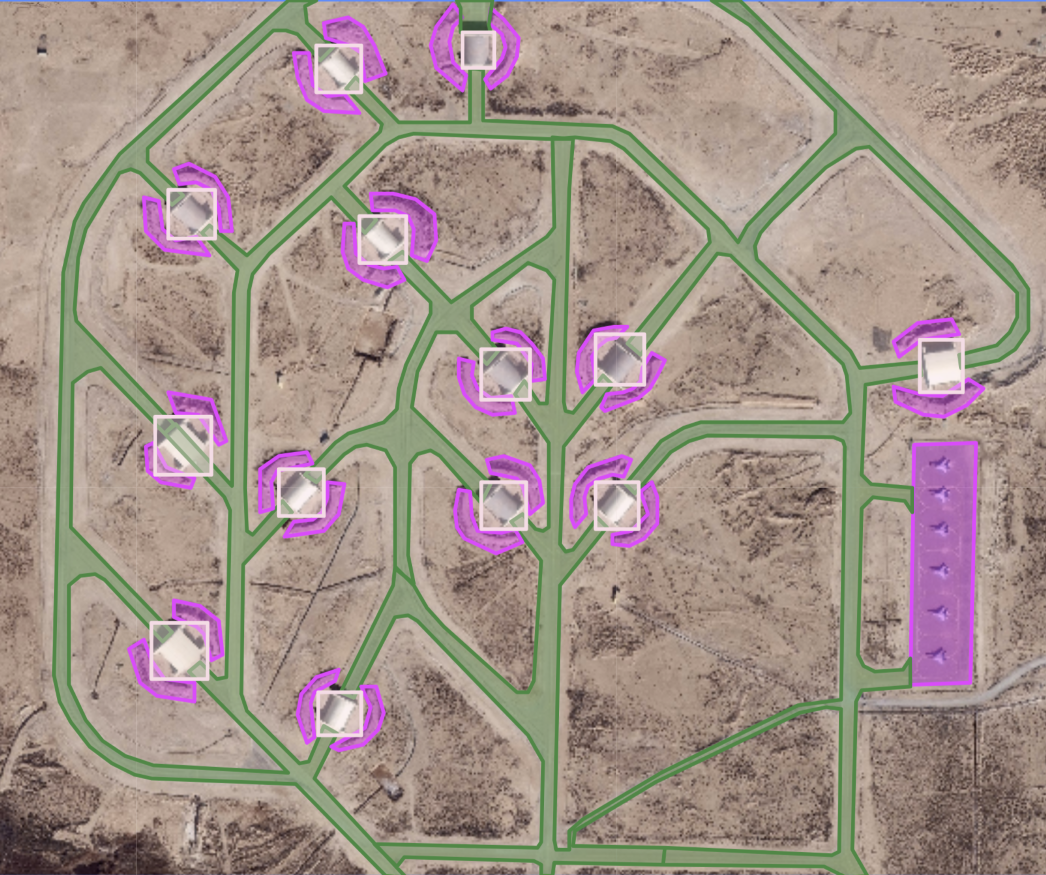

Fortunately for workers and for national security, Scale AI’s approach is not representative of the entire US AI industry. Enabled Intelligence, for example, is not only headquartered in the USA; all of our work is done in the USA, at our secure facility, by our full-time employees, all of whom are US citizens and many of whom are veterans. We are not alone. We have many partners and competitors who are passionate about data privacy and data security, and about delivering high-quality AI solutions to our US government customers. Let’s hope some of these firms are profiled soon in the Washington Post and elsewhere.

At a time when the public is skeptical about “big tech” and US taxpayer dollars are flowing to companies like Scale, it is critical for our economy and for national security to ensure that the data used to “teach” AI technology is unbiased, high quality, and secure. We need bipartisan support for investments in AI and for the responsible development and use of AI. Poor-quality AI data created by underpaid, overworked, and poorly trained workers in “data sweatshops” will erode public acceptance of AI technology and will only help our economic competitors and adversaries.

Peter Kant is the Founder and CEO of Enabled Intelligence, Inc., an artificial intelligence technology company providing accurate data labeling and AI technology development. He has held leadership positions in the US federal government and in non-profit research organizations, and has served as CEO of multiple technology companies.